- AI voice assistants are being integrated into cars for hands-free and intuitive functionality.

- Voice assistants like Google Assistant and Apple Siri can recognize and respond to natural language commands, allowing drivers to interact with their vehicles more effectively.

- Integrating natural-language virtual voice assistants is complex and requires significant resources as well as expertise in machine learning and data collection. As a result, only a few companies can currently do it successfully.

ChatGPT’s popularity has prompted many people to consider AI’s potential applications, one of which is in the automotive sector. As vehicle dashboards have been simplified, more functions, such as navigation, entertainment, climate control and vehicle diagnostics, have been integrated into the central display. At the same time, the central computer in vehicles is becoming more powerful and can handle more tasks. Together, these trends give drivers easier, more user-friendly ways to interact with their vehicles while enabling more advanced and customizable vehicle functions.

This trend has coincided with the development of software-defined vehicles, which take this integration a step further by using a centralized software architecture to control all vehicle functions. This architecture allows for greater flexibility and makes it possible to update vehicle systems over the air (OTA).

There has been increasing demand to integrate additional functions into the central display, such as voice assistants, in-car digital assistants and advanced driver assistance systems (ADAS). However, oversimplifying cabin controls creates problems of its own: many people still prefer knobs and buttons despite the prevalence of touchscreen displays in modern cars. Below are some reasons:

- Tactile feedback: Many people find it more intuitive to use these physical controls than to navigate through a digital menu on a touchscreen display. Knobs and buttons provide physical feedback when they are pressed or turned, which can make it easier to interact with the controls without taking your eyes off the road.

- Visibility: In some cases, knobs and buttons can be easier to see and use in bright sunlight or other challenging light conditions, as they do not suffer from glare or reflections in the same way that a touchscreen display might.

- Safety: Using physical knobs and buttons can be safer than interacting with a touchscreen display, as drivers can operate them by feel, keeping their eyes on the road and taking a hand off the wheel only briefly.

It is therefore crucial for a car's central screen to offer a simplified human-machine interface (HMI) that is user-friendly, reliable and intuitive. Such an HMI minimizes the learning curve for drivers and lets them access the features they want quickly and with few errors. The most important element of this HMI is the virtual voice assistant.

Several popular virtual voice assistants are available on the market today, such as Amazon Alexa, Google Assistant, Apple Siri, Microsoft Cortana, Samsung Bixby, Baidu Duer and Xiaomi Xiao AI. In addition, there are proprietary virtual voice assistants designed specifically for the automotive industry, such as Cerence, SoundHound Houndify, Harman Ignite and Nuance Dragon Drive.

Most of these automotive virtual assistants are designed to integrate seamlessly with the vehicle's infotainment system, offering drivers a variety of voice-activated functions, including hands-free phone calls, weather updates, music streaming and navigation. They are also designed to recognize and respond to natural language commands, enabling drivers to engage with their vehicles in a more intuitive and effortless manner. By providing a safe and convenient way to interact with the vehicle, these assistants allow drivers to keep their hands on the wheel and their eyes on the road.

While virtual voice assistants have improved significantly in recent years, there are still some challenges that need to be addressed. Here are some common problems that currently exist with virtual voice assistants:

- Understanding complex commands: Virtual voice assistants may encounter difficulties in comprehending intricate commands or requests that involve several variables or conditions.

- Accents and dialects: Virtual voice assistants may also have difficulty understanding users with different accents or dialects.

- Background noise: Virtual voice assistants can be sensitive to background noise, which can make it difficult for them to understand user commands or requests.

- Privacy concerns: As virtual voice assistants become more ubiquitous, there are growing concerns about the privacy of user data.

- Integration with other automotive systems: Virtual voice assistants may have difficulty integrating with other systems or devices, which can limit their functionality and usefulness.

ChatGPT can understand and generate natural language and converse like a human because it is a language model trained on a massive amount of text data using a deep-learning architecture called the transformer. During training, ChatGPT was exposed to vast amounts of natural language text, such as books, articles and web pages. This allowed it to learn the patterns and structures of human language, including grammar, vocabulary, syntax and context.

Beyond broad-based pre-training, models such as ChatGPT can be fine-tuned on specialized data sets, which for a car may include frequently used vehicle commands or data covering a range of distinct national accents. In this approach, a model first pre-trained on a large corpus of unlabeled text is further trained on the smaller, domain-specific data set to adapt its language understanding to the target use case.

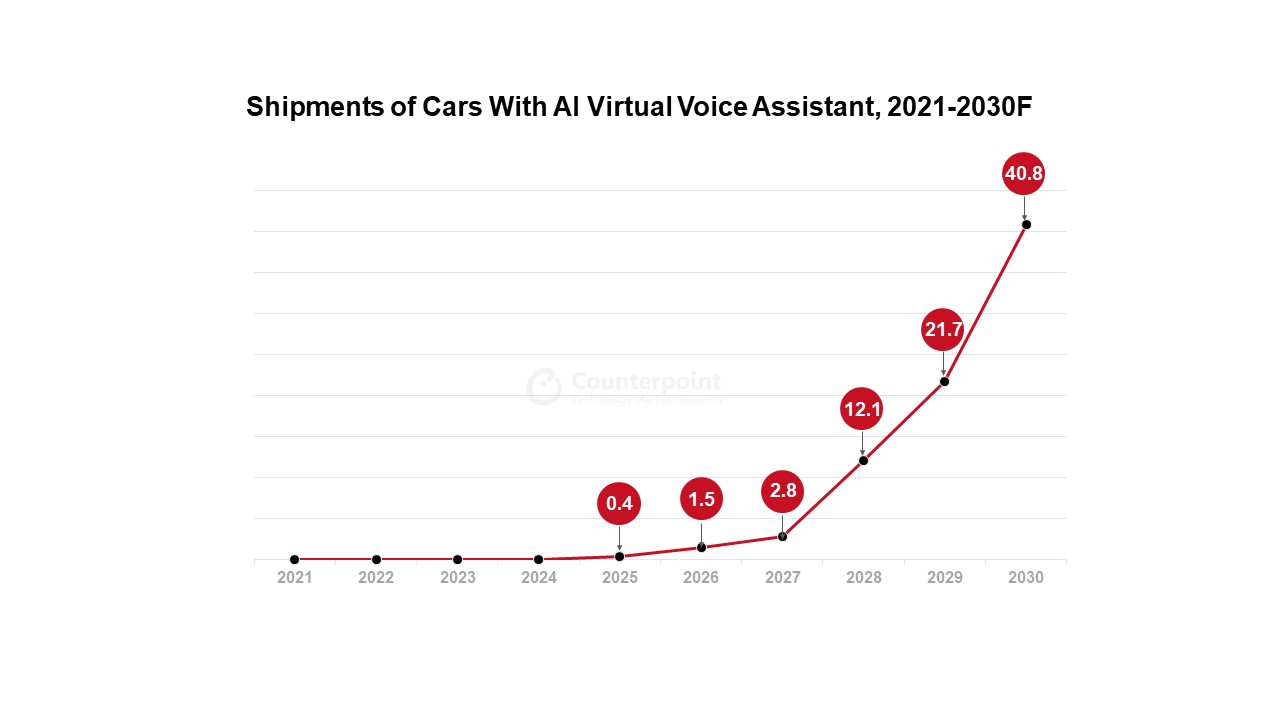

The following figure shows our forecast for the use of intelligent voice control in cars.

Overall, the potential of natural language voice assistants in cars is vast, and with ongoing research and development, we can expect to see more advanced and sophisticated voice assistants in the future. However, developing a successful natural language virtual voice assistant for cars is a complex and time-consuming process that requires multiple iterations of training and fine-tuning.

Because this development requires a considerable amount of data, computational resources and expertise, only a handful of companies, such as Microsoft, Tesla, NVIDIA, Qualcomm, Google and Baidu, have the resources to undertake it. Developing the technology is estimated to take three to four years. Demand for vehicles above Level 3 autonomy will also increase.

As highlighted in our report “Should Automotive OEMs Get Into Self-driving Chip Production?”, the automotive industry will confront obstacles related to electrification and intelligent technology, necessitating sustained capital investments and support from semiconductor suppliers. Consequently, only a handful of established car manufacturers with considerable economies of scale will be able to finance these initiatives. The growing popularity of natural voice control in cars will only intensify these challenges.
